Deep Learning Basics for Image Processing

Understanding the Role of Deep Learning in Modern Image Processing

The landscape of image processing has undergone a transformative shift over the past decade, moving from manual, handcrafted techniques toward powerful, data-driven deep learning algorithms. Traditionally, computer vision experts relied heavily on feature engineering: carefully designing filters and descriptors like SIFT or HOG to extract meaningful information from images. While these classical approaches produced commendable results in many scenarios, they often struggled to adapt to new environments or handle complex visual variations. As a result, developers spent significant time fine-tuning these handcrafted features, and performance gains were often incremental at best.

The emergence of deep learning changed the game entirely. Instead of meticulously crafting features, neural networks learn them automatically from large datasets of labeled images. This shift became possible not only due to the widespread availability of massive image repositories but also because of substantial advancements in computational power. Modern Graphics Processing Units (GPUs) and specialized hardware accelerators have enabled the training of increasingly sophisticated neural network architectures — often composed of millions or even billions of parameters — in a fraction of the time it once required. Deep Convolutional Neural Networks (CNNs), for example, take raw image data as input and, through multiple layers of learnable filters, progressively distill complex shapes, textures and patterns into insightful high-level representations.

Thanks to these breakthroughs, deep learning now forms the core of a vast spectrum of image processing applications, spanning from fundamental image classification tasks to highly specialized domains. Simple image classification — such as determining whether a picture contains a dog or a cat — marked the beginning of this revolution. Today, we have powerful object detection systems capable of pinpointing and identifying multiple objects within a single frame, even under challenging conditions. Advanced techniques can segment images pixel-by-pixel, remove backgrounds automatically and recognize textual content through Optical Character Recognition (OCR). Industry-specific solutions have also emerged, including brand mark and logo recognition, which helps companies maintain brand integrity and analyze consumer media; and other niche applications like alcohol label detection, NSFW content filtering and face recognition. This expanding ecosystem of capabilities highlights deep learning’s adaptability and robustness, showcasing how it continues to reshape our approach to visual intelligence.

In essence, the rise of deep learning in image processing is about more than just improved accuracy. It’s about unlocking new realms of possibility — creating versatile systems that learn patterns autonomously, adapt quickly to new tasks and ultimately offer innovative solutions across industries. As deep learning techniques mature and computing power continues to grow, we can expect these models to become ever more efficient, opening doors to endless opportunities for businesses, developers and researchers alike.

Key Foundations: Neural Networks and Convolutional Layers

At the core of deep learning’s success in image processing are neural networks, specifically a class known as Convolutional Neural Networks (CNNs). A traditional fully connected network treats an image as a flat vector of pixel values and so discards its spatial layout. CNNs instead use an architecture loosely inspired by the human visual system: they “look” at images through small filters (or kernels) that slide over the input, capturing local patterns and building increasingly complex representations of what they see.

Convolutional Layers and Feature Extraction

The hallmark of a CNN is the convolutional layer. Here, the network applies a set of learnable filters to small, localized regions of the image. Early layers of a CNN often detect basic visual signals — edges, gradients or simple geometric shapes. As the network’s depth increases, subsequent layers combine these rudimentary features into more intricate patterns, textures and object parts. By the time you reach the deeper layers, the network forms a high-level understanding of entire objects or important elements within the scene, allowing it to perform tasks like categorizing images or pinpointing objects with remarkable precision.

This hierarchical approach to feature extraction enables CNNs to generalize extremely well. Rather than relying on handcrafted features, the model learns patterns directly from the data. This makes CNNs highly adaptable to different kinds of images — from natural photographs and medical scans to microscopic images and even satellite imagery — because the network can tailor its internal filters to suit the nuances of any domain.
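
To make the sliding-filter idea concrete, here is a minimal pure-Python sketch of what a single filter in a convolutional layer computes. The kernel values are set by hand purely for illustration (a Sobel-like vertical edge detector); in a real CNN they would be learned during training, and a framework would run this far more efficiently. The function and variable names are assumptions made for this example.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the response map.

    Like most deep learning frameworks, this computes a cross-correlation:
    the kernel is not flipped before it is applied.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            acc = 0.0
            # Weighted sum of the local patch under the kernel
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        output.append(row)
    return output


# Toy 5x5 image: dark on the left (0), bright on the right (1)
image = [[0, 0, 1, 1, 1] for _ in range(5)]

# Hand-written vertical edge filter; a CNN would learn such values itself
vertical_edge = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

response = convolve2d(image, vertical_edge)
```

The response is strong (4.0) wherever the window straddles the dark-to-bright boundary and 0.0 where the window covers only bright pixels, which is the kind of "edge" signal early layers tend to pick up.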

Common Architectures and Their Use Cases

Over the years, numerous CNN architectures have emerged, each designed with a specific purpose in mind. Some well-known examples include:

LeNet: One of the earliest successful CNNs, LeNet laid the groundwork for modern architectures. It’s simple and efficient, often used for basic tasks like recognizing handwritten digits (e.g., classifying the digits in a postal code).

AlexNet and VGG: These pioneering models brought deep learning into the spotlight for image classification. AlexNet popularized larger, deeper networks trained on GPUs, while VGG’s straightforward architecture of stacked convolutional layers became a staple benchmark for further research. They remain go-to options for foundational experiments and academic exploration.

ResNet: Popularized “skip connections”, which add a block’s input directly to its output. This alleviates the problem of vanishing gradients and makes it possible to train much deeper networks. Its robust performance makes ResNet a favorite for a wide range of applications, including object detection and image segmentation.

MobileNet and EfficientNet: Designed for efficiency, these models prioritize smaller size and lower computational cost. They’re particularly suited for deploying on mobile devices or edge computing platforms, where processing resources and energy consumption are limited.

Different tasks call for different architectures. For instance, if your goal is to classify images at scale on consumer devices, you might lean toward a lightweight model like MobileNet. If you’re working on complex image segmentation or object detection projects, a deeper architecture like ResNet or a specialized model equipped with attention mechanisms may provide the best results.
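
The “skip connection” idea mentioned for ResNet is simple enough to sketch in a few lines. The snippet below is an illustrative toy on plain lists, not ResNet itself: `transform` stands in for the convolutional layers inside a block, and both toy transforms are assumptions made up for this example.

```python
def residual_block(x, transform):
    """Return transform(x) + x, element by element. The '+ x' is the skip connection."""
    fx = transform(x)
    return [f + xi for f, xi in zip(fx, x)]


def zero_transform(x):
    """A block that has learned nothing yet: it outputs all zeros."""
    return [0.0 for _ in x]


def small_transform(x):
    """A toy stand-in for learned layers: a small correction to the input."""
    return [0.1 * xi for xi in x]


x = [1.0, -2.0, 3.0]

# With a zero transform the block passes its input through unchanged
identity_out = residual_block(x, zero_transform)

# Stacking blocks: each one only adds a correction on top of its input
stacked = x
for _ in range(3):
    stacked = residual_block(stacked, small_transform)
```

Because each block only has to learn a correction to its input, a block that contributes nothing still passes the signal through intact, which is one intuition for why very deep stacks remain trainable.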

Bringing It All Together

By understanding the fundamental building blocks of CNNs and the advantages of convolutional layers, developers and researchers can more effectively design, optimize and deploy image processing solutions. As you dive deeper into this domain, you will find an array of model architectures and training strategies to choose from. With the right combination, CNNs can transform raw image data into meaningful insights, empowering cutting-edge applications in computer vision and beyond.

Preparing and Preprocessing Your Image Data

Before a deep learning model can deliver accurate and reliable results, it needs a well-structured, high-quality dataset. In many ways, the success of a deep learning project hinges on the thoroughness and care taken during the data preparation phase. By following best practices — such as thoughtful labeling, systematic dataset splitting and strategic preprocessing — you’ll set a solid foundation that allows your model to learn and generalize effectively.

Building a High-Quality Dataset

The first step is ensuring that your dataset is both representative and well-organized:

Labeling and Annotation:

Precise, consistent labeling is paramount. If you’re classifying images of different dog breeds, for example, every image must be correctly tagged with the right breed. For object detection or segmentation tasks, accurate bounding boxes and masks are essential. Investing time in meticulous annotation (possibly using specialized annotation tools) pays off later, reducing noise in the dataset and increasing overall model performance.

Dataset Splitting:

Once your images are annotated, divide your dataset into three parts: a training set, a validation set and a test set.

Training Set: The bulk of your data, used to teach the model underlying patterns.

Validation Set: A smaller portion of data used to tune model hyperparameters and to spot overfitting during training.

Test Set: A final, unseen portion of data that provides a realistic measure of how well your model generalizes to new information.

Proper splitting ensures that your model is evaluated on images it has never seen before, offering an unbiased estimate of its real-world performance.
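
The three-way split described above can be sketched with the standard library alone. The 70/15/15 proportions, the fixed seed and the file names are assumptions chosen for illustration; the right ratios depend on how much data you have.

```python
import random


def split_dataset(items, train_frac=0.7, val_frac=0.15, seed=42):
    """Shuffle `items` reproducibly and cut them into train, validation and test lists."""
    items = list(items)              # copy so the caller's list is left untouched
    random.Random(seed).shuffle(items)
    n_train = round(len(items) * train_frac)
    n_val = round(len(items) * val_frac)
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]   # everything that is left
    return train, val, test


# Hypothetical file names standing in for a real image collection
paths = [f"img_{i:03d}.jpg" for i in range(100)]
train, val, test = split_dataset(paths)
```

Fixing the seed keeps the split stable between runs, so the test set stays unseen across experiments. For imbalanced classes, a stratified split (one that preserves class proportions in every part) is usually a better choice than this plain shuffle.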

Preprocessing Techniques for Robust Models

Raw images are often inconsistent in terms of resolution, lighting and color distribution. Preprocessing steps help create a level playing field for your model to learn effectively:

Normalization and Standardization:

Different images can have drastically different brightness levels or color intensities. Normalizing pixel values (commonly scaling them to a range between 0 and 1, or standardizing them to zero mean and unit variance) ensures that all images are on a similar scale. This helps the model converge faster and improves generalization.

Resizing and Cropping:

To train a CNN, all input images typically need to be the same size. Resizing images to a uniform resolution and, if necessary, cropping out extraneous parts of the frame ensures that your model focuses on the relevant features. Choose a resolution that balances computational feasibility with sufficient detail.

Data Augmentation:

Data augmentation techniques — such as random flips, rotations, zooms or slight color shifts — help your model become more resilient to real-world variations. This artificially increases the size of your training set and teaches the model to cope with changes in viewing angle, lighting conditions and other naturally occurring differences. In turn, your model becomes more robust, delivering better performance on new, unseen data.
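
The preprocessing steps above can be sketched on a tiny grayscale image stored as nested lists. This is an illustrative toy, assuming 8-bit pixel values; in practice an image library or a framework's transform pipeline would do the same work on real arrays. All function names are made up for this example.

```python
import random


def scale_to_unit(image):
    """Map 8-bit pixel values (0-255) to floats in [0, 1]."""
    return [[p / 255.0 for p in row] for row in image]


def standardize(image):
    """Shift and scale pixels to zero mean and unit variance."""
    pixels = [p for row in image for p in row]
    mean = sum(pixels) / len(pixels)
    var = sum((p - mean) ** 2 for p in pixels) / len(pixels)
    std = var ** 0.5 or 1.0          # fall back to 1.0 for a perfectly flat image
    return [[(p - mean) / std for p in row] for row in image]


def center_crop(image, size):
    """Keep the central size x size region."""
    top = (len(image) - size) // 2
    left = (len(image[0]) - size) // 2
    return [row[left:left + size] for row in image[top:top + size]]


def horizontal_flip(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def random_flip(image, rng, probability=0.5):
    """Augmentation: flip with the given probability, otherwise return the image as is."""
    return horizontal_flip(image) if rng.random() < probability else image


image = [
    [0, 64, 128, 255],
    [255, 128, 64, 0],
    [10, 20, 30, 40],
    [40, 30, 20, 10],
]

scaled = scale_to_unit(image)
standardized = standardize(image)
cropped = center_crop(image, 2)
augmented = random_flip(image, random.Random(0))
```

Augmentation such as `random_flip` is normally applied only to training images, and afresh each epoch; validation and test images get the deterministic steps (resize, crop, normalize) only.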

Managing Data at Scale

As projects grow in complexity, data management becomes increasingly important:

Consistent Data Formats and Storage:

Maintaining consistent naming conventions, directory structures and file formats (e.g., using common formats like JPEG or PNG) simplifies future updates and retrieval. This discipline reduces confusion and speeds up development cycles.

Version Control for Datasets:

Much like source code, datasets evolve over time. Perhaps you’ve added new classes, improved annotations or collected additional images. Keeping track of these changes with dataset version control tools ensures that you can always revert to or compare different dataset versions. This practice is especially critical when troubleshooting performance regressions or repeating experiments for reproducibility.
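
One lightweight way to notice that a dataset has changed is to compute a fingerprint over its files and store it alongside each experiment. The sketch below is a simplified illustration of that idea, not a replacement for a dedicated dataset versioning tool. It works on an in-memory mapping of relative path to file bytes, and all paths and contents are hypothetical.

```python
import hashlib


def dataset_fingerprint(files):
    """Return a hex digest summarizing a dataset.

    `files` maps a relative path (which often encodes the label, e.g. 'cats/001.jpg')
    to that file's raw bytes. Paths are visited in sorted order so the result does
    not depend on the order in which files were listed.
    """
    digest = hashlib.sha256()
    for path in sorted(files):
        digest.update(path.encode("utf-8"))
        digest.update(b"\0")                                # separator between path and content hash
        digest.update(hashlib.sha256(files[path]).digest())
    return digest.hexdigest()


# Hypothetical dataset versions
v1 = {"cats/001.jpg": b"cat-pixels", "dogs/001.jpg": b"dog-pixels"}
v1_reordered = dict(reversed(list(v1.items())))
v2 = {"cats/001.jpg": b"cat-pixels", "dogs/001.jpg": b"dog-pixels-retouched"}

same = dataset_fingerprint(v1) == dataset_fingerprint(v1_reordered)
changed = dataset_fingerprint(v1) != dataset_fingerprint(v2)
```

Recording the fingerprint with each training run makes it easier to tell whether a performance regression came from the code or from the data.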

By prioritizing the quality and integrity of your data, you lay the groundwork for more effective training, faster experimentation and smoother scaling. Good dataset preparation practices not only save time and effort down the line but ultimately translate into higher-performing deep learning models — models that truly unlock the potential of image processing tasks.

Common Deep Learning Applications in Image Processing

One of the most exciting aspects of deep learning in image processing is the breadth of applications it can power. What began as a quest to identify cats and dogs in images has blossomed into a diverse landscape of capabilities serving a multitude of industries. By understanding these core tasks and emerging niches, businesses and developers can more strategically leverage deep learning to solve real-world problems.

Core Image Processing Tasks

Three foundational tasks form the bedrock of many modern computer vision solutions:

Image Classification:

At its core, image classification asks: “What is in this image?” Deep learning models, particularly Convolutional Neural Networks (CNNs), have achieved remarkable accuracy in recognizing objects, animals, products and other categories. Whether it’s filtering images by content, sorting personal photo libraries or automating quality checks on production lines, image classification stands as a starting point for countless image processing solutions.

Object Detection:

Object detection goes beyond classification by pinpointing the exact location of objects within an image. Advanced models can draw bounding boxes around specific objects, count the number of instances and even track them through video frames. This technique is crucial in applications like autonomous driving (detecting pedestrians, cars, road signs), security surveillance (identifying intruders) and retail analytics (monitoring products on shelves).

Image Segmentation:

Image segmentation refines the understanding of a scene by analyzing each pixel. Instead of just stating what objects are present, segmentation delineates the precise shape and boundaries of every object. This detailed breakdown enables tasks like medical image analysis (isolating tumors or organs in scans), geographic information systems (mapping land usage) and high-level photo editing (selectively adjusting parts of an image without affecting others).
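
The difference between a detection-style output (a bounding box) and a segmentation-style output (a per-pixel mask) is easy to see on a toy example. The sketch below assumes a binary mask stored as nested lists and derives the tightest box around it; the mask and function name are invented for illustration.

```python
def mask_to_box(mask):
    """Return (left, top, right, bottom) of the nonzero pixels, or None for an empty mask.

    Coordinates are inclusive pixel indices.
    """
    rows = [r for r, row in enumerate(mask) if any(row)]
    if not rows:
        return None
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    return (cols[0], rows[0], cols[-1], rows[-1])


# Segmentation-style output: 1 marks pixels that belong to the object
mask = [
    [0, 0, 0, 0, 0],
    [0, 0, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 1, 0, 0],
]

box = mask_to_box(mask)                              # detection-style output
left, top, right, bottom = box
box_area = (right - left + 1) * (bottom - top + 1)   # pixels covered by the box
object_area = sum(sum(row) for row in mask)          # pixels covered by the object itself
```

Here the box covers 9 pixels while the object occupies only 6. The mask carries the exact shape, which is why segmentation is preferred when boundaries matter, for example when outlining a tumor or cutting out a subject.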

Emerging and Specialized Applications

As deep learning continues to advance, more specialized and novel image processing capabilities are emerging, offering fine-tuned solutions to complex industry challenges:

Automated Background Removal:

Models can now seamlessly remove backgrounds from images, isolating subjects and enabling clean, professional visuals without manual editing. This technology saves time for e-commerce stores displaying product images, photographers enhancing their portfolio and content creators crafting eye-catching social media posts.

Image Anonymization:

With privacy regulations tightening worldwide, the ability to anonymize faces, vehicle license plates or other identifying features in images is vital. Deep learning-driven anonymization tools help ensure compliance with data protection laws and maintain user trust in surveillance, ride-sharing and other data-sensitive fields.

Logo and Brand Mark Recognition:

Identifying brand logos within images fuels competitive analysis, brand visibility tracking and marketing intelligence. From analyzing user-generated social media content to auditing product placements in TV broadcasts, this application empowers companies to better understand their brand exposure.

Alcohol Label Recognition and NSFW Detection:

Specialized recognition tools can now distinguish between different types of alcohol labels, aiding in inventory management, product recommendations and retail analytics. Similarly, NSFW (Not Safe For Work) detection filters out inappropriate content. Both applications demonstrate deep learning’s ability to adapt to very specific domains, ensuring content remains tailored and compliant for particular use cases.

Extracting Text from Images with OCR

Optical Character Recognition (OCR) is another crucial application that involves translating visual text into machine-readable formats. For retail and logistics industries, OCR streamlines processes like invoice scanning, product cataloging and automated data entry. In the broader business ecosystem, OCR enables translation of signboards, digitization of documents and extraction of printed details from labels. By combining OCR with other image processing techniques, organizations can create end-to-end automated pipelines that transform raw, unstructured visual data into actionable insights.

From foundational techniques like classification and segmentation to cutting-edge applications like anonymization and NSFW detection, the world of deep learning in image processing is evolving at an astounding pace. As newer models continue to emerge, their ability to understand, manipulate and interpret visual information will only grow, bringing innovative possibilities to industries far and wide.

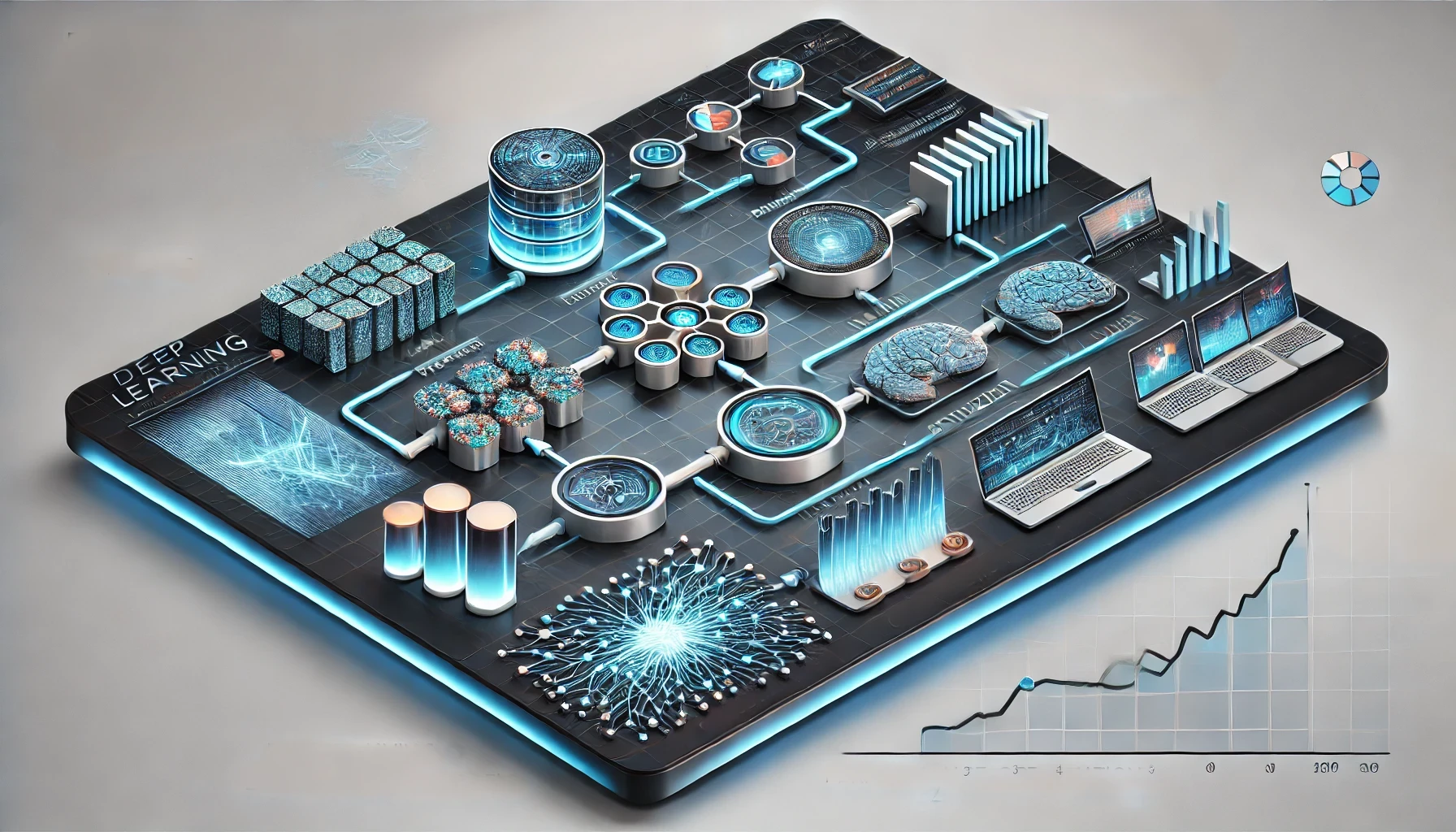

From Training to Inference: Implementing and Optimizing Models

Building a successful deep learning model for image processing is a journey that stretches well beyond writing a few lines of code. It involves several careful steps, from the initial training process to validating the model’s performance and finally optimizing it for real-world use. Each phase requires attention to detail, strategic decision-making and a keen understanding of best practices to ensure your model performs efficiently, accurately and consistently.

Training Fundamentals: Hyperparameters and Transfer Learning

The training process transforms raw data and a chosen model architecture into a powerful, domain-specific solution. This stage often involves:

Hyperparameter Tuning:

Hyperparameters (such as learning rate, batch size or the number of layers in your model) significantly influence both the speed and quality of learning. Finding the right combination often requires experimentation and iterative testing. Techniques like grid search, random search or more advanced methods like Bayesian optimization help streamline this process. By homing in on the optimal hyperparameters, you can achieve improved accuracy with fewer training iterations, ultimately saving both time and computational resources.

Transfer Learning for Efficiency:

Training a complex model from scratch can be time-consuming and data-intensive. Transfer learning offers a shortcut by starting with a model already trained on a large, diverse dataset (such as ImageNet). You then fine-tune this pre-trained model on your specific dataset, leveraging the general visual features it has already learned — such as detecting edges, textures and simple shapes. This approach accelerates convergence, reduces the need for massive amounts of labeled data and ultimately speeds up experimentation.
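
Grid search, the simplest of the tuning strategies mentioned above, can be sketched in a few lines. The `validation_score` function below is a hypothetical stand-in for "train a model with these settings and return its validation accuracy"; it is a made-up formula that peaks at a learning rate of 0.01 and a batch size of 32, so the example runs instantly.

```python
import itertools


def validation_score(learning_rate, batch_size):
    """Toy stand-in for a full train-and-validate run (not a real model)."""
    return 1.0 - abs(learning_rate - 0.01) * 10 - abs(batch_size - 32) / 1000


def grid_search(grid, score_fn):
    """Try every combination in `grid` and return the best (params, score) pair."""
    names = list(grid)
    best_params, best_score = None, float("-inf")
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = score_fn(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


grid = {
    "learning_rate": [0.1, 0.01, 0.001],
    "batch_size": [16, 32, 64],
}
best_params, best_score = grid_search(grid, validation_score)
```

The cost of a grid grows multiplicatively with every added hyperparameter (here 3 x 3 = 9 runs), which is why random search or Bayesian optimization tends to be preferred once more than a handful of settings are in play.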

Validation and Testing for Generalization

A model that performs perfectly on the training set but fails to handle new images is not very useful in real-world applications. Ensuring generalization — the model’s ability to handle unseen scenarios — is essential:

Validation Set:

During training, monitoring performance on a validation set helps guide hyperparameter tuning and model updates. When validation accuracy plateaus, starts to drop or diverges from training accuracy, it’s often a sign of overfitting or suboptimal settings. Continuous validation fosters a feedback loop, enabling you to adjust training strategies on the fly.

Test Set:

After finalizing hyperparameters and model architecture, the test set provides a one-time evaluation of the model’s true performance on unseen data. Think of this step as your final exam — no more adjustments, no retakes. A robust test set outcome verifies that your model’s accuracy, precision and recall metrics hold up under real-world conditions.
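
The accuracy, precision and recall figures mentioned above are simple to compute once you have the true labels and the model's predictions. Here is a minimal sketch for the binary case; the labels and predictions are invented for illustration, with 1 meaning the positive class.

```python
def binary_metrics(y_true, y_pred):
    """Compute accuracy, precision and recall for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


# Hypothetical test-set labels and model predictions
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 1, 1, 0]

metrics = binary_metrics(y_true, y_pred)
```

In this toy case accuracy is 0.625, precision 0.6 and recall 0.75: the model finds most positives but also raises two false alarms. Looking at all three together matters most when classes are imbalanced, where accuracy alone can be misleading.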

Optimizing for Inference: Speed and Scalability

Once you’ve trained and validated your model, the next challenge is deploying it efficiently. Inference — using the trained model to predict outcomes for new inputs — must be optimized for speed, resource usage and scalability. This is especially crucial for applications that demand low-latency responses or run on resource-constrained devices:

Model Compression Techniques:

Techniques like pruning (removing unnecessary parameters), quantization (reducing numerical precision) and knowledge distillation (training a smaller model to mimic a larger one’s predictions) help shrink model size. These steps not only reduce memory footprint but also boost inference speed without severely degrading accuracy.

Hardware Acceleration and Deployment Platforms:

Harnessing hardware-accelerated environments (such as GPUs, TPUs or specialized AI chips) can deliver real-time inference on servers or edge devices. Many frameworks and libraries now support just-in-time (JIT) compilation or leverage low-level optimizations to ensure that your model can handle large-scale inference workloads efficiently.

Cross-Platform Compatibility:

With the proliferation of mobile devices, IoT sensors and embedded systems, ensuring your model runs smoothly across multiple platforms is vital. Tools like TensorFlow Lite, ONNX Runtime or PyTorch Mobile convert and optimize models for edge deployment. Such tailored solutions help deliver high-quality predictions anywhere — whether it’s a smartphone scanning product labels or a security camera detecting unauthorized visitors.
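
Of the compression techniques listed above, quantization is the easiest to illustrate by hand. The sketch below applies a simple affine 8-bit scheme to a handful of made-up weights. It is a simplified illustration of the principle, not how any particular toolkit implements it; production tools add per-channel scales, calibration data and integer arithmetic kernels.

```python
def quantize(weights, num_bits=8):
    """Map float weights to integer codes in [0, 2**num_bits - 1].

    Returns (codes, scale, minimum), which is everything needed to approximately
    reconstruct the original values.
    """
    levels = 2 ** num_bits - 1
    lo, hi = min(weights), max(weights)
    scale = (hi - lo) / levels or 1.0      # fall back to 1.0 if all weights are equal
    codes = [round((w - lo) / scale) for w in weights]
    return codes, scale, lo


def dequantize(codes, scale, lo):
    """Reconstruct approximate float weights from integer codes."""
    return [c * scale + lo for c in codes]


# Hypothetical weights from a trained layer
weights = [-1.0, -0.25, 0.0, 0.5, 1.0]

codes, scale, lo = quantize(weights)
restored = dequantize(codes, scale, lo)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

Each weight now fits in one byte instead of four (for 32-bit floats), and the reconstruction error is bounded by half a quantization step. That is the trade-off behind quantization: a much smaller model at the cost of a small, controlled loss of precision.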

From fine-tuning hyperparameters to scaling up inference for millions of users, each stage of model development and deployment plays a critical role. By investing time and effort into these aspects, you not only achieve peak model performance but also future-proof your workflows — ensuring that your image processing solutions remain fast, accurate and impactful as your business and user base continue to grow.

Scaling Up: Using APIs and Custom Solutions

When it comes to bringing deep learning image processing systems into production, the “build it all in-house” approach isn’t always the most efficient. Deploying a robust, scalable solution involves significant engineering efforts — from infrastructure management to model updates and performance optimizations. For many businesses, especially those that are new to deep learning, leveraging cloud-based APIs and custom development services offers a more strategic route.

Rapid Integration with Cloud-Based APIs

Cloud-based image processing APIs serve as plug-and-play building blocks, enabling companies to integrate advanced deep learning capabilities into their applications without the need to start from scratch. By sending images to an API endpoint, developers can access functionalities like object detection, image classification, background removal or OCR — often within minutes. This approach:

Speeds Up Time-to-Market:

Instead of waiting months to train and deploy a custom model, teams can incorporate out-of-the-box solutions that deliver immediate results. This rapid integration is ideal for lean startups or established enterprises looking to test concepts quickly.

Simplifies Infrastructure Management:

The API provider handles scaling, updates and hardware optimization. Developers are freed from the burden of provisioning servers, managing complex GPU clusters or fine-tuning model deployments. As your application grows and your user base expands, the service scales seamlessly behind the scenes.

Offers Consistent Performance and Reliability:

Because API providers continuously improve and maintain their models, you benefit from ongoing enhancements in speed and accuracy without lifting a finger. This predictable performance builds trust with end-users and allows you to focus on delivering value rather than troubleshooting technical details.
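
As a rough picture of what "sending images to an API endpoint" involves, the sketch below builds an HTTP upload request using only the Python standard library. Everything provider-specific here is an assumption: the URL is a placeholder, and the form field name (`image`) and the bearer-token header are invented for illustration. Consult your provider's documentation for the real endpoint, parameters and authentication scheme.

```python
import urllib.request
import uuid


def build_image_request(url, image_bytes, filename="image.jpg", api_key=None):
    """Build (but do not send) a multipart/form-data POST request carrying one image."""
    boundary = uuid.uuid4().hex
    body = b"".join([
        f"--{boundary}\r\n".encode(),
        # The field name "image" is an assumption; providers differ
        f'Content-Disposition: form-data; name="image"; filename="{filename}"\r\n'.encode(),
        b"Content-Type: application/octet-stream\r\n\r\n",
        image_bytes,
        f"\r\n--{boundary}--\r\n".encode(),
    ])
    headers = {"Content-Type": f"multipart/form-data; boundary={boundary}"}
    if api_key:
        # Hypothetical auth scheme; check the provider's documentation
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


# Placeholder endpoint and fake image bytes, for illustration only
request = build_image_request(
    "https://api.example.com/v1/object-detection",
    b"\xff\xd8fake-jpeg-bytes",
)
```

Sending the request would then be a call to `urllib.request.urlopen(request, timeout=30)`, followed by parsing the response, which is typically JSON describing the detected objects, labels or extracted text.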

Tailored Solutions for Unique Requirements

While ready-to-use APIs solve a broad range of common scenarios, some projects call for more specialized attention. In such cases, companies like API4AI don’t just offer off-the-shelf services — they also provide custom development options. A custom solution might involve training a model on a highly specialized dataset, refining performance metrics for unusual conditions or integrating additional image data sources into a unified pipeline. This tailored approach allows you to:

Address Niche Use Cases:

Not all image processing challenges fit neatly into standard, generic models. Custom solutions let you tackle industry-specific tasks, from rare product categorization to specialized quality inspections, ensuring that your application stands out in a competitive market.

Optimize for Specific Platforms and Constraints:

Whether your deployment environment involves mobile devices with limited memory or edge sensors requiring low-latency inference, custom solutions can be fine-tuned to run efficiently under unique constraints. This approach maximizes performance, user experience and cost-effectiveness.

Leverage Domain Expertise:

Collaborating with experts who understand state-of-the-art deep learning techniques, industry best practices and emerging research can significantly speed innovation. Their insights guide you through architectural decisions, data sourcing, labeling approaches and compliance considerations — all tailored to your business objectives.

Focusing on Innovation Instead of Reinvention

By relying on established APIs and expert-led custom services, teams can sidestep the complexity of building everything in-house. Instead of struggling with the fine details of training pipelines, infrastructure orchestration or hyperparameter tuning, your developers can invest their creativity in new features, user experiences or integration opportunities. This approach is all about leveraging the expertise of specialized solution providers to accelerate time-to-value, reduce overhead and ensure that your image processing capabilities evolve in lockstep with changing industry demands.

In short, tapping into cloud-based APIs and partnering on custom solutions grants you the freedom to focus where it matters most: delivering cutting-edge products, delighting customers and outpacing competitors — all while harnessing the transformative power of deep learning for image processing.

Future Trends and Conclusion

As the field of deep learning in image processing continues to evolve, researchers and practitioners are charting new territories that promise even greater capabilities and efficiencies. Recent breakthroughs and emerging directions hint at a future where machine vision grows more autonomous, flexible and human-like in its understanding of the visual world.

Emerging Research Directions

Self-Supervised and Unsupervised Learning:

Traditionally, deep learning models have relied on large sets of manually labeled images, an expensive and time-consuming prerequisite. Self-supervised and unsupervised learning techniques aim to reduce this burden by enabling models to learn from raw, unlabeled data. By discovering underlying patterns without explicit human guidance, these methods can rapidly adapt to new domains and yield more generalizable models.

Multimodal and Transformer-Based Models:

Another exciting avenue is the integration of multiple data modalities, such as combining images with text, audio or sensor readings. Transformer-based models and multimodal architectures are already demonstrating remarkable progress, empowering systems to interpret images alongside captions, understand scenes paired with spoken instructions or correlate visual data with structured metadata. This holistic understanding enhances everything from content recommendation engines to advanced robotics.

More Efficient Architectures and Hardware Acceleration:

As deep learning gains a foothold in edge devices, low-power sensors and mobile applications, demand for efficient, compact architectures continues to grow. Techniques that reduce model size, increase inference speed or adapt gracefully to resource constraints ensure that powerful image processing capabilities can run reliably anywhere — from data centers to handheld gadgets. Meanwhile, specialized AI hardware such as GPUs, TPUs and dedicated inference accelerators make real-time image analysis increasingly accessible.

Responsible AI and Growing Regulatory Demands

With great technological progress comes an increased focus on the ethical and regulatory dimensions of AI. Models that process images must respect privacy, treat all individuals fairly and provide transparent, explainable outcomes. Emerging regulations and industry standards require developers to consider these factors at every stage — choosing training data carefully, evaluating bias and implementing strong data protection policies. Responsible AI practices not only safeguard end-users and ensure compliance but also enhance the overall credibility and trustworthiness of image-based applications.

Conclusion: Embrace the Transformative Power of Deep Learning

Deep learning’s influence on image processing is profound — no longer limited to research labs, it drives innovation in fields as diverse as retail analytics, healthcare diagnostics, automotive safety and entertainment. By applying the foundational concepts we’ve discussed and staying informed about emerging trends, businesses and developers can unlock new opportunities to differentiate themselves, streamline operations and elevate user experiences.

Moreover, as the ecosystem of APIs and custom services — offered by providers like API4AI — continues to expand, it has never been easier to integrate cutting-edge capabilities into your workflows. Instead of starting from scratch, teams can access powerful tools and partner with experts, accelerating progress and freeing time to focus on what matters most: delivering value and innovation to customers.

Ultimately, the future of deep learning in image processing is bright, full of promise and well within reach. By remaining curious, embracing responsible AI practices and leveraging specialized solutions, you can harness this remarkable technology to shape a more intelligent and visually literate world.